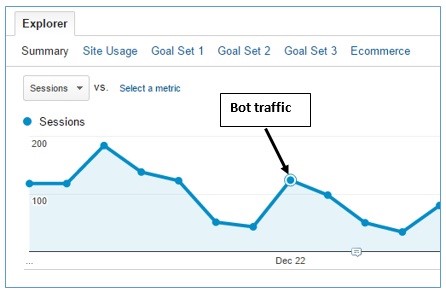

When analyzing the data collected by Google Analytics, we often see a surge in website traffic. It is tempting to read this as growing interest in the site, and such an increase in visits is naturally welcome.

But it is not always a cause for joy. Sometimes we later discover that most of this referral traffic was sent by spammers. Referral spam has become a big problem lately.

Referral spam occurs when your site receives fake referral traffic from spambots. This fake traffic is recorded by Google Analytics. If you see traffic from spam sources in Analytics, you need to take steps to remove this data from your statistics.

What is a bot?

A bot is a program that performs repetitive tasks with maximum speed and accuracy.

The traditional use of bots is crawling and indexing web content, which search engines do regularly. But bots can also be used for malicious purposes, for example:

- committing click fraud;

- harvesting e-mail addresses;

- scraping website content;

- distributing malicious software;

- artificially inflating resource traffic.

Based on the tasks they perform, bots can be divided into safe and dangerous.

Dangerous and safe bots

An example of a good bot is Googlebot, which Google uses to crawl and index web pages on the Internet. Most bots (whether safe or unsafe) do not execute JavaScript, but some do.

Bots that execute JavaScript (including the Google Analytics tracking code) show up in Google Analytics reports and skew traffic metrics (direct traffic, referral traffic) and other session-based metrics (bounce rate, conversion rate, etc.).

Bots that do not execute JavaScript do not distort the above data. But their visits are still recorded in the server logs. They also consume server resources and bandwidth, and can slow down the website.

It’s impossible to say for sure which dangerous bots can distort Google Analytics data and which can’t. Therefore, it is worth treating all dangerous bots as a threat to data integrity.

Spambots

As the name implies, the main task of these bots is spam. They visit a huge number of web resources every day, sending HTTP requests to sites with fake referrer headers. This allows them to avoid detection as bots.

The spoofed referrer header contains the URL of the website that the spammer wants to promote or build backlinks for.

When your site receives an HTTP request from a spambot with a fake referrer header, the request is immediately written to the server log. If your server log is publicly available, it can be crawled and indexed by Google. The search engine then treats the referrer value in the server log as a backlink, which ultimately affects the ranking of the website promoted by the spammer.

More recently, Google’s indexing algorithms have been designed to ignore data from such logs, which negates the efforts of the creators of these bots.
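To see where the fake referrer ends up, it can help to look at a single access-log entry. The following is a minimal sketch, assuming the server writes logs in the common Apache "combined" format; the sample line, its IP address, and the referrer `spam-site.example` are made up for illustration.

```python
import re

# Apache "combined" log format:
# IP, identity, user, [time], "request", status, size, "referrer", "user agent"
COMBINED_LOG = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"$'
)

def parse_log_line(line):
    """Return the fields of one combined-format log line, or None if it does not match."""
    match = COMBINED_LOG.match(line.strip())
    return match.groupdict() if match else None

# Hypothetical log entry; the IP address and referrer are placeholders.
sample = (
    '203.0.113.7 - - [10/Oct/2020:13:55:36 +0000] "GET / HTTP/1.1" 200 2326 '
    '"http://spam-site.example/" "Mozilla/5.0"'
)
entry = parse_log_line(sample)
print(entry["ip"], entry["referrer"])
```

The referrer field is whatever the client chose to send, which is why a spambot can put any URL there.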

Spambots capable of executing JavaScript can bypass the filtering methods used by Google Analytics, so their traffic shows up in Google Analytics reports.

Botnet

When a spambot uses a botnet (a network of infected computers located locally or around the world), it can access a website from hundreds of different IP addresses. In this case, IP blacklisting or rate limiting (capping the number of requests accepted from a single address) becomes largely useless.

The ability of a spambot to distort traffic to your site grows with the size of the botnet it uses.

With a large botnet with different IP addresses, a spam bot can access your website without being blocked by a firewall or other traditional security mechanism.

Not all spambots send referrer headers.

Traffic from such bots does not appear as referral traffic in Google Analytics reports. Whenever a referrer is not sent, Google Analytics treats the traffic as direct, which makes it even more difficult to detect.

A spambot can create dozens of fake referrer headers.

If you have blocked one referrer source, spambots will send another fake one to the site. Therefore, spam filters in Google Analytics or .htaccess do not guarantee that your site is completely protected from spambots.

Not all spambots are equally dangerous, but some of them are a serious threat.

Very dangerous spambots

The goal of really dangerous spambots is not only to distort your site’s traffic, scrape its content, or harvest e-mail addresses. Their goal is to infect computers with malware and make them part of a botnet.

Once your computer is integrated into the botnet, it is used to send spam, viruses, and other malware to other computers on the Internet.

There are thousands of computers all over the world that are used by real people while also being part of a botnet.

Your own computer may be part of a botnet without your being aware of it.

If you decide to block a botnet, you will most likely also block traffic from real users.

There is a chance that as soon as you visit a suspicious site from your referral traffic report, your machine becomes infected with malware. Therefore, do not visit suspicious sites from analytics reports without proper protection, such as anti-virus software installed on your computer. It is preferable to use a separate machine specifically for visiting such sites. Alternatively, you can ask your system administrator to deal with this problem.

Smart spambots

Some spambots (like darodar.com) can send artificial traffic without even visiting your site. They do this by replaying the HTTP requests that the Google Analytics tracking code sends, using your web property ID. They can send you not only fake traffic but also fake referrers, for example bbc.co.uk. Since the BBC is a legitimate site, when you see this referrer in your report, it does not occur to you that traffic coming from a reputable site might be fake. In fact, no one from the BBC has visited your site.

These smart and dangerous bots don’t need to visit your website or execute JavaScript. Since they don’t actually visit your site, these visits are not recorded in the server log.

And since their visits are not recorded in the server log, you cannot block them at the server level (by IP address, user agent, referrer, etc.).
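To make this concrete, the sketch below shows roughly what such a hit looks like as data. It assumes the Universal Analytics Measurement Protocol field names (`v`, `tid`, `cid`, `t`, `dp`, `dr`); the property ID `UA-XXXXX-Y` and the client ID are placeholders, and nothing is sent over the network. The point is only that the referrer is an ordinary text field in the payload.

```python
from urllib.parse import urlencode, parse_qs

def build_hit_payload(property_id, referrer, page="/"):
    """Build the query string of a pageview hit (nothing is sent anywhere)."""
    fields = {
        "v": "1",            # protocol version
        "tid": property_id,  # web property ID
        "cid": "555",        # client ID (arbitrary placeholder)
        "t": "pageview",     # hit type
        "dp": page,          # document path
        "dr": referrer,      # document referrer: plain text supplied by the sender
    }
    return urlencode(fields)

payload = build_hit_payload("UA-XXXXX-Y", "http://bbc.co.uk/")
print(payload)
print(parse_qs(payload)["dr"])
```

Because the payload goes to the analytics service rather than to your web server, none of its fields pass through your .htaccess rules or your server log.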

Smart spambots crawl your site looking for web property IDs. Sites that don’t use Google Tag Manager have the Google Analytics tracking code embedded directly in their web pages.

The Google Analytics tracking code contains your web property ID. The ID is stolen by a smart spambot and can be passed on to other bots for use. No one can guarantee that the bot that stole your web property ID and the bot that sends you artificial traffic are the same one.

You can address this problem by using Google Tag Manager (GTM) to deploy Google Analytics tracking on your website. If your web property ID has already been stolen, it is most likely too late for this fix. All you can do then is use a different ID or wait for Google to resolve the issue.

Not every site is attacked by spambots.

The primary task of spambots is to find and exploit vulnerabilities in a web resource. They attack weakly protected sites. Accordingly, if your site is on “budget” hosting or uses a custom CMS, it has a high chance of being attacked.

Sometimes changing web hosting is enough for a site that is often attacked by dangerous bots. This simple step can really help.

How can you protect your site from spambots?

Create an annotation on your Google Analytics graph explaining what caused the unusual traffic spike. This makes it possible to discount that traffic during later analysis.

Block the referrer used by the spambot. Add the following code to your .htaccess file (or web config if using IIS):

RewriteEngine On

Options +FollowSymLinks

RewriteCond %{HTTP_REFERER} ^https?://([^.]+\.)*buttons-for-website\.com [NC]

RewriteRule .* - [F]

This code will block all HTTP and HTTPS referrals from buttons-for-website.com, including its subdomains.
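Before deploying a rule like this, it can be worth checking which referrers the pattern actually matches. The following is a rough sketch that tests the regular expression from the RewriteCond in Python; the helper name `is_blocked` and the sample referrers are illustrative, and Python's `re` module is only an approximation of Apache's regex engine (for a pattern this simple they should behave the same way).

```python
import re

# The pattern from the RewriteCond; re.IGNORECASE plays the role of the [NC] flag.
SPAM_REFERRER = re.compile(
    r"^https?://([^.]+\.)*buttons-for-website\.com", re.IGNORECASE
)

def is_blocked(referrer):
    """Return True if the referrer would match the rewrite condition."""
    return SPAM_REFERRER.search(referrer) is not None

for referrer in [
    "http://buttons-for-website.com/",
    "https://share.buttons-for-website.com/page",
    "https://example.com/",
]:
    print(referrer, is_blocked(referrer))
```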

Block the IP address used by the spambot. Add the code shown below to your .htaccess file:

RewriteEngine On

Options +FollowSymLinks

Order Deny,Allow

Deny from 234.45.12.33

Note: Do not copy this code into your .htaccess as-is, because it will not work for your site. It is only an example of how to block an IP address in a .htaccess file.

Spambots are capable of using different IP addresses. Regularly add newly detected spambot IP addresses to your block list.

Only block IP addresses that affect the site.

It is pointless to try to block every known IP address. The .htaccess file will become unwieldy and difficult to manage, and the performance of the web server will decrease.

Have you noticed that the number of blacklisted IP addresses is growing rapidly? This is a clear sign of emerging security problems. Contact your web hosting provider or system administrator. Use Google to find a published blacklist of IP addresses to block. Automate this work by writing a script that finds and denies IP addresses whose harmfulness is not in doubt.
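One possible starting point for such a script is sketched below. It assumes the client IP is the first field of each access-log line (as in the common Apache log formats) and uses a simple request-count threshold, which is only a hypothetical heuristic: a high request count alone does not prove an address is harmful, so the output should be reviewed before it goes into .htaccess. The sample log lines are made up.

```python
from collections import Counter

def count_requests_by_ip(log_lines):
    """Count requests per client IP; the IP is the first field of each log line."""
    counts = Counter()
    for line in log_lines:
        fields = line.split()
        if fields:
            counts[fields[0]] += 1
    return counts

def deny_rules(counts, threshold):
    """Return 'Deny from' lines for IPs with more than `threshold` requests."""
    return [
        f"Deny from {ip}"
        for ip, hits in sorted(counts.items())
        if hits > threshold
    ]

# Hypothetical excerpt; in practice the lines would be read from the access log.
sample_log = [
    '198.51.100.4 - - [10/Oct/2020:13:55:36 +0000] "GET / HTTP/1.1" 200 512 "-" "bot"',
    '198.51.100.4 - - [10/Oct/2020:13:55:37 +0000] "GET / HTTP/1.1" 200 512 "-" "bot"',
    '198.51.100.4 - - [10/Oct/2020:13:55:38 +0000] "GET / HTTP/1.1" 200 512 "-" "bot"',
    '192.0.2.10 - - [10/Oct/2020:14:01:02 +0000] "GET /about HTTP/1.1" 200 900 "-" "Mozilla/5.0"',
]
for rule in deny_rules(count_requests_by_ip(sample_log), threshold=2):
    print(rule)
```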

Take advantage of the ability to block IP ranges used by spambots. When you are sure that a specific range of IP addresses is being used by a spambot, you can block the whole range with a single rule, as shown below:

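RewriteEngine On

Options +FollowSymLinks

Order Allow,Deny

Allow from all

Deny from 76.149.24.0/24

Here 76.149.24.0/24 is the CIDR range (CIDR, Classless Inter-Domain Routing, is a notation for representing address ranges). The Order Allow,Deny line makes the Deny rule take precedence over Allow from all, so the range stays blocked while everyone else is let through.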

Using CIDR blocking is more efficient than blocking individual IP addresses, since a single line covers a whole range and keeps the .htaccess file small.
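To get a feel for what a CIDR range covers, Python's standard `ipaddress` module can be used. The sketch below inspects the 76.149.24.0/24 range from the example and shows how a list of individually blocked addresses could be merged into CIDR blocks; the list of 256 single addresses is constructed here purely for illustration.

```python
import ipaddress

network = ipaddress.ip_network("76.149.24.0/24")
print(network.num_addresses)    # how many addresses the range contains
print(network[0], network[-1])  # first and last address in the range
print(ipaddress.ip_address("76.149.24.200") in network)

# Merge individually listed addresses into the fewest CIDR blocks covering exactly them.
listed = [ipaddress.ip_network(f"76.149.24.{i}/32") for i in range(256)]
merged = list(ipaddress.collapse_addresses(listed))
print(merged)
```

Here 256 separate entries collapse into one range, which is the saving that a single CIDR rule gives in .htaccess.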

Block the user agents used by spambots. Analyze server log files weekly to detect and block malicious user agents. Once blocked, they will not be able to access the web resource. An example is shown below:

RewriteEngine On

Options +FollowSymLinks

RewriteCond %{HTTP_USER_AGENT} Baiduspider [NC]

RewriteRule .* - [F,L]

With a Google search, you can find an impressive number of resources that maintain lists of known malicious user agents. Use this information to identify these user agents on your site.

The easiest way is to write a script that automates the entire process. Build a database of all known banned user agents. Use a script that automatically identifies and blocks them based on the data in the database. Update the database regularly, because new banned user agents appear all the time.
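A minimal sketch of such a script might look like the following. It assumes the "database" is just a list of banned names and that the observed user agents have already been extracted from the server log; the function names and the `EvilScraper` entry are hypothetical. Only names actually seen in the log are turned into rules.

```python
import re

def find_banned_agents(user_agents, banned):
    """Return the banned names that occur (case-insensitively) in the observed user agents."""
    seen = set()
    for agent in user_agents:
        for name in banned:
            if name.lower() in agent.lower():
                seen.add(name)
    return sorted(seen)

def rewrite_rules(names):
    """Build mod_rewrite lines that return 403 Forbidden for the given user agents."""
    if not names:
        return []
    lines = []
    for i, name in enumerate(names):
        # Every condition except the last is chained with OR.
        flags = "[NC]" if i == len(names) - 1 else "[NC,OR]"
        lines.append(f"RewriteCond %{{HTTP_USER_AGENT}} {re.escape(name)} {flags}")
    lines.append("RewriteRule .* - [F,L]")
    return lines

banned = ["Baiduspider", "EvilScraper"]  # EvilScraper is a made-up name
observed = [
    "Mozilla/5.0 (compatible; Baiduspider/2.0)",
    "Mozilla/5.0 (Windows NT 10.0)",
]
for line in rewrite_rules(find_banned_agents(observed, banned)):
    print(line)
```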

Block only user agents that actually affect the resource. It is pointless to try to block every known user agent, as this will make the .htaccess file too large and difficult to manage. Server performance will also decrease.

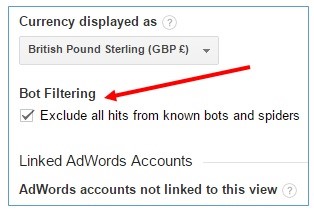

Use the “Bot Filtering” filter available in Google Analytics – “Exclude hits from known bots and spiders.”

Monitor server logs at least weekly. The fight against dangerous bots can start at the server level. Until you have managed to discourage spambots from visiting your site, do not filter them out of your Google Analytics reports.

Use a firewall. A firewall acts as a reliable filter between your computer (or server) and the Internet, and can protect a web resource from dangerous bots.

Get expert help from your system administrator. Protecting client web resources from malicious activity around the clock is their main job. The person responsible for network security has many more tools to repel bot attacks than the site owner. If you find a new bot that threatens the site, inform the system administrator immediately.

Use Google Chrome to surf the web. If no firewall is in use, it is best to browse the Internet with Google Chrome.

Chrome can also detect malicious software. It opens web pages quickly while still scanning them for malware.

If you use Chrome, the risk of picking up malware on your computer is reduced, even when you visit a suspicious resource from Google Analytics referral traffic reports.

Use custom alerts to monitor unexpected traffic spikes. Custom alerts in Google Analytics allow you to quickly detect and neutralize harmful bot requests, minimizing their impact on the site.
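The idea behind such an alert can be sketched in a few lines. This is not how Google Analytics implements custom alerts; it is a simple illustration that assumes you have exported daily session counts, and the `window` and `factor` values and the sample numbers are arbitrary choices.

```python
from statistics import mean

def find_spikes(daily_sessions, window=7, factor=2.0):
    """Return indexes of days whose sessions exceed `factor` times
    the mean of the previous `window` days."""
    spikes = []
    for day in range(window, len(daily_sessions)):
        baseline = mean(daily_sessions[day - window:day])
        if baseline > 0 and daily_sessions[day] > factor * baseline:
            spikes.append(day)
    return spikes

# Hypothetical daily session counts with one suspicious jump.
sessions = [120, 130, 110, 125, 140, 135, 128, 131, 460, 126]
print(find_spikes(sessions))
```

A flagged day is only a prompt to look at the referral and hostname reports for that date; a spike can just as well come from a legitimate mention of the site.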

Use the filters available in Google Analytics. To do this, open the “Admin” tab, select “Filters” in the “View” column, and create a new filter.

Conclusion

I hope the above recommendations help you get rid of the sources of spam on your site. There are different ways to do this, but the ones described here have helped many sites protect their data in Google Analytics.

About the Author

Scott Butcher is an experienced SEO specialist at Essay Writer Free with over 5 years of experience in the industry. Scott enjoys exploring the latest in SEO and connecting with like-minded people.